The Entertainment & Performing Arts Industry Conference (EPIC) is a global online entertainment conference that was held across 24 hours on 10th January 2022. Spanning the four categories of Perform, Create, Design, and Produce, the event hosted a broad spectrum of entertainment professionals sharing their stories and wisdom from all industries and areas of the Arts. With sessions running concurrently across the 24-hour period, creating a treasure trove of content (available to dive into on demand in the coming month), we reflect on some of the highlights from the event.

Constantly fuelled by the question of “What’s Next?”, Istanbul composer Mehmet Ünal and artist/producer Kerem Demirayak explore the fascinating creative space of music and sound composition. Fusing A.I. algorithms, traditional and invented instruments, new media and the Metaverse, this remarkable, interactive mainstage session explores the integration of traditional music design with innovative technologies creating human connection and immersive experiences. Their work has received numerous awards and recognition around the world. This riveting session will not only give EPIC audiences a peek into their process and performance but will also allow the audience to witness and interact with a live composition created in real time!

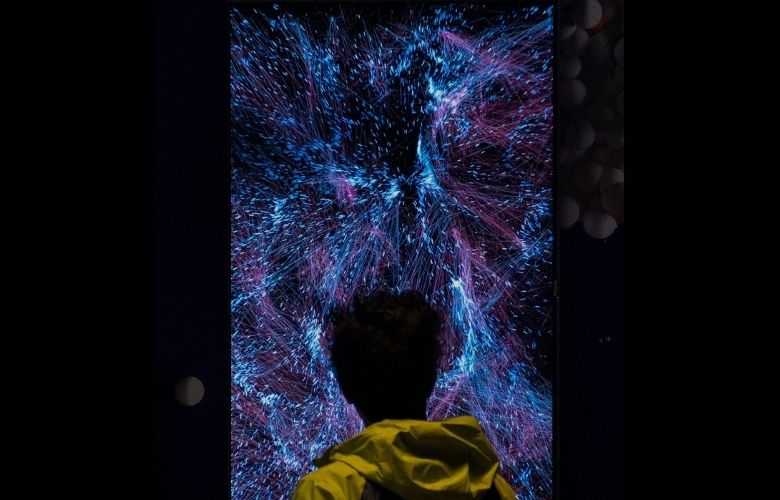

One of my personal highlights from the EPIC event was undoubtedly watching Mehmet Ünal and Kerem Demirayak performing together across the globe in the metaverse. Both wore Oculus VR headsets, and their headset views were shared on screen for us to see. Mehmet had a 3 x 3 grid of notes in front of him, with another nine notes in the same layout to his left and another to his right. Kerem appeared from his home, with a screen sharing his Oculus view of a traditional keyboard.

Both musicians began playing synthesizer sounds using the movements of their hands, creating a duet on their respective virtual grids and keys. Remember, they are not ‘physically’ touching anything; it is the sensors picking up their movements that register a note as ‘struck’.

Soundscape features including electronic ambient noise, sustained notes, and risers join the ensemble next, before an electronic beat is introduced with pre-recorded sound effects fading in and out.

Mehmet then takes off his headset and attends to the laptop on stage, which sits next to a theremin. He picks up a real trumpet, stops the beat, and plays the acoustic instrument into a microphone, while Kerem continues playing on the VR keyboard layout and the ambient soundscape continues to drift in and out in the background. The beat comes in once more and Mehmet returns to the laptop and theremin before everything drifts out, leaving Mehmet to take a solo trumpet section that completes the piece in this ‘reality’.

Powered by IBM Cloud™, Mehmet Ünal aims to create the new aesthetics of “connectedness” using multimedia and immersive experiences that offer the interconnected world of the Internet of Things (IoT) and Artificial Intelligence (AI).

The Internet of Arts (IoA) represents a coalescence of objects that share a collective “connectedness” in natural and human-made worlds. The enablers of the IoA concept are powerful IoT technologies like the IBM Watson supercomputer cluster, motion sensors, WiFi-enabled micro-controllers, LED displays, and back-end servers. In particular, the sensor inputs of Leap Motion are fed to Deep Learning based AI and Machine Learning (ML) algorithms that are integrated in the IBM Cloud. The Node-Red framework in the IBM Cloud allows us to create a connected network of art objects and human-computer interfaces, alongside multimedia frameworks such as Max/MSP for audio processing, server-side programming and data processing.

Through the complex network of technologies, we invite our audience to perform by controlling parameters of the analogue synthesizer Moog Slim Phatty and observe the performance through audio-reactive visuals in real-time.
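For readers curious how an audience gesture might end up steering a synth parameter, here is a minimal Python sketch. It is not the duo's actual code. It assumes the audience input arrives as a number between 0 and 1 and turns it into a standard three-byte MIDI Control Change message; the function name `control_change` and the controller number are hypothetical, so check the synth's own MIDI chart for real values.

```python
def control_change(channel: int, controller: int, level: float) -> bytes:
    """Build a 3-byte MIDI Control Change message.

    channel:    MIDI channel, 0-15
    controller: controller number, 0-127
    level:      audience input, expected in the range 0.0 to 1.0
    """
    if not 0 <= channel <= 15:
        raise ValueError("channel must be 0-15")
    if not 0 <= controller <= 127:
        raise ValueError("controller must be 0-127")
    # Clamp out-of-range input, then scale to the 7-bit MIDI value range.
    clamped = min(1.0, max(0.0, level))
    value = round(clamped * 127)
    status = 0xB0 | channel  # 0xB0 is Control Change on the first channel
    return bytes([status, controller, value])


# Hypothetical controller number, chosen only for illustration.
EXAMPLE_CC = 19

message = control_change(channel=0, controller=EXAMPLE_CC, level=1.0)
print(message.hex())
```

Clamping before scaling means a stray sensor reading outside the expected range cannot produce an invalid MIDI byte.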

Following their performance, the musicians joined a conversation about their work, explaining that in creating new metaverse technology they look to the past, to the orchestral setup of individuals together forming a collective sound. The theremin grew out of a Soviet physics experiment on electromagnetism in the 1920s and ushered in a new era of innovation in music.

Integrating the theremin with the orchestra reinvented aesthetics from that point on. Electric current and its use have dominated music history for the past century: first generating sound through oscillators, then with MIDI arriving as a communication language. However, these were closed systems that could not be shared; because they were offline, multiple people could not play them simultaneously.

Mehmet and Kerem believe that net art holds the potential for a new age of art creation, and that we could soon welcome cloud-based de-centralised instruments. The musicians ask us to imagine an instrument that could play along with you, as if an additional 19 arms were playing too. They give the example of 100 people playing together in an orchestra, and ask us to imagine 100 people playing one instrument with VR, AR, or XR in the metaverse.

Music DAWs have introduced the ability to play with others across a network, with the launch of Ableton Link and GarageBand Jam Session in recent years, to name but a few. However, these work with traditional ‘normal’ instruments played together with hands striking the notes in the usual way, without the VR metaverse aspect or sensor-based interactive elements.

Mehmet and Kerem introduced us to TILDE meta instruments, their innovative new way of making tools and instruments. With their method, they used an analogue Moog synth to push the sound (perhaps chosen as a homage to Robert Moog, who popularised the theremin outside of Russia back in the 1960s). The duo explained that they made 19 different parameters with different sounds, along with the 3D space for recognising the sounds and corresponding algorithms.

We are excited to introduce TILDE, the new generation of meta instruments and musical experiences in the Web3.0 and Metaverse.

These instruments will embody various technologies and capabilities in multiple XR mediums. We are aiming to build an interactive, blockchain-integrated instrument that you could own and that could be played by hundreds of people simultaneously via 5G technology.

We hope to build this project with the support of XRhub Bavaria and had the chance to showcase it with 1e9_community in Deutsches Museum Munich.

Delving into the technical aspects of this creation, and the four-step process from machine learning to cloud integration, to the Internet of Musical Things, and finally to the metaverse, can seem like a lot to take in at first.

Following how musical and computer languages are used in executing these four steps makes a little more sense once you understand that MIDI is converted to computer language twice – becoming JavaScript, then MQTT – before being placed on the blockchain.

| Machine Learning | Cloud Integration | Internet of Things | Metaverse |
| --- | --- | --- | --- |
| Leap Motion, Wekinator, MIDI | Cloud provider, OSC, JavaScript | MQTT (IoT protocol), Node-RED | Blockchain, WebVR, WebAudio, Meta Synths, Crypto Synths |
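To make the hand-off between these stages a little more concrete, the following Python sketch shows one way a MIDI note message could be repackaged as a topic and JSON payload ready for an MQTT broker. It is an illustration under assumptions, not the duo's implementation: the topic layout, the `midi_to_mqtt` name and the `demo-synth` label are all made up for this example, and actually publishing the result would need an MQTT client library.

```python
import json
from typing import Tuple


def midi_to_mqtt(message: bytes, instrument: str) -> Tuple[str, bytes]:
    """Turn a 3-byte MIDI note message into an MQTT topic and JSON payload."""
    if len(message) != 3:
        raise ValueError("expected a 3-byte MIDI message")
    status, note, velocity = message
    kind = status & 0xF0     # upper nibble: message type
    channel = status & 0x0F  # lower nibble: MIDI channel
    if kind == 0x90 and velocity > 0:
        event = "note_on"
    elif kind in (0x80, 0x90):
        # By MIDI convention, a note-on with velocity 0 counts as a note-off.
        event = "note_off"
    else:
        raise ValueError("only note messages are handled in this sketch")
    # Hypothetical topic layout: instruments/<name>/<event>
    topic = f"instruments/{instrument}/{event}"
    payload = json.dumps(
        {"channel": channel, "note": note, "velocity": velocity}
    ).encode("utf-8")
    return topic, payload


# Middle C (note 60) struck at velocity 100 on the first channel.
topic, payload = midi_to_mqtt(bytes([0x90, 60, 100]), "demo-synth")
print(topic, payload.decode("utf-8"))
```

Keeping the event type in the topic rather than the payload would let a subscriber listen only to note-on events, which is one reason topic-based protocols such as MQTT suit many players sharing one instrument.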

Additionally, the duo have kindly added their patching map and more technical information to assist those who want to understand more about the process, particularly in following the sensor elements. The process is explained more fully as:

“Another framework supporting sensor-based interactive performance is Wekinator and Leap Motion which seeks to extend music language toward gesture and visual languages, with a focus on analyzing expressive content in gesture and movement, and generating expressive outputs.

In particular, the sensor inputs of Leap Motion are fed to Deep Learning based AI and Machine Learning (ML) algorithms that are integrated in the IBM Cloud. Node-Red framework in the IBM Cloud allows us to create a connected network of art objects and human-computer interfaces, and multimedia frameworks such as Max/MSP, Notch, TouchDesigner, and custom software written in Python.”
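As a rough illustration of how gesture data might reach Wekinator, the Python sketch below hand-encodes an OSC message carrying three floating-point features. The `/wek/inputs` address and UDP port 6448 are Wekinator's commonly documented defaults as far as I know, so treat them as assumptions; the three values are invented stand-ins for Leap Motion readings, and none of this is taken from the duo's patch.

```python
import socket
import struct
from typing import Sequence


def osc_pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC strings require."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, values: Sequence[float]) -> bytes:
    """Encode an OSC message whose arguments are all 32-bit floats."""
    type_tags = "," + "f" * len(values)
    packet = osc_pad(address.encode("ascii")) + osc_pad(type_tags.encode("ascii"))
    for value in values:
        packet += struct.pack(">f", value)  # big-endian float32
    return packet


def send_udp(packet: bytes, host: str = "127.0.0.1", port: int = 6448) -> None:
    """Send one packet over UDP; host and port here are assumed defaults."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))


# Invented hand-position features standing in for real sensor readings.
packet = osc_message("/wek/inputs", [0.0, 0.5, 1.0])
print(len(packet), "bytes")
```

Calling `send_udp(packet)` would fire the message at a listener on the local machine; it is left uncalled here so the sketch runs without one.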

The topic of music in the metaverse really is a fascinating area, whether looking through the view of IBM and technology creators, from the perspective of a musical performer or composer, or simply as an audience member appreciating the result. There is undoubtedly a huge amount of potential for more things to come out of this area – whether as lots of instruments being played together, or in lots of people playing one instrument together in the metaverse. The possibilities are endlessly exciting.

Michelle is a musician and composer from the UK. She has performed across the UK and Europe and is passionate about arts education and opportunities for women and girls.

© 2021 TheatreArtLife. All rights reserved.